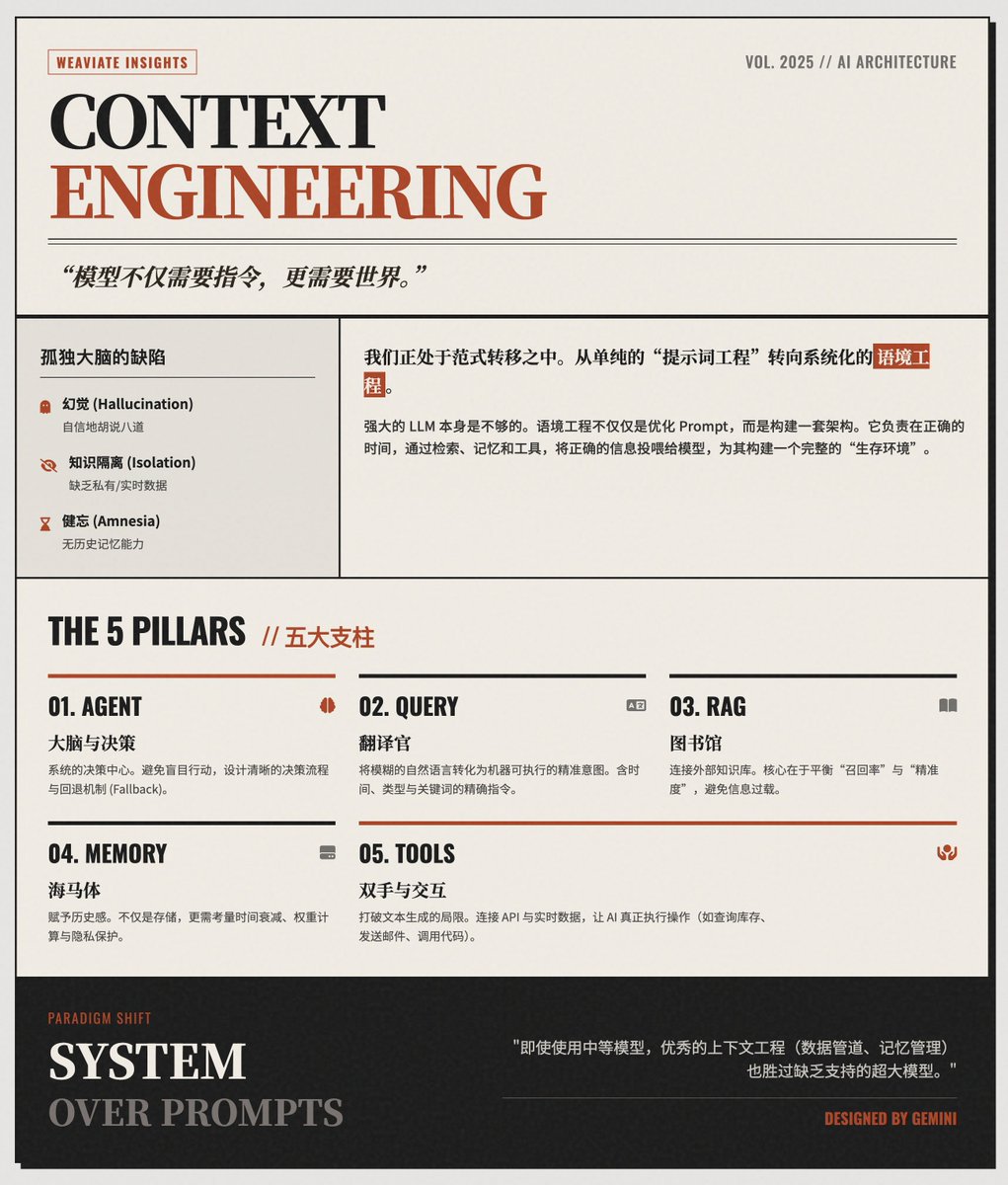

Context Engineering for AI Agents

@weaviate_io's latest blog post systematically re-examines "context engineering" for AI agents: building a complete environment for the model to operate in, including memory, tools, and a knowledge base. As usual, the accompanying illustrations are excellent and make the theory and methods much easier to understand. I recommend reading the original article.

Key takeaway: models need not only "instructions" but also a "world."

The post makes a counterintuitive but crucial point: a powerful LLM on its own is not enough. Even the smartest model is a "lone brain" with three inherent flaws:
• Hallucination: confidently stating things that are false.
• Knowledge isolation: no access to specific private data or real-time knowledge of the world.
• No memory: on its own, it cannot remember even the previous turn of a conversation.

Context engineering is designed to solve exactly these problems. It is not just about writing better prompts; it is about building an architecture that feeds the model the right information at the right time. Its goal is to connect an isolated model to the real world so that it reasons with a complete "context."

The article's "Five Pillars of Context Engineering" breaks context engineering down into five core architectural patterns, which also serve as a blueprint for building production-ready AI applications:
• Agent (the brain): the decision-making center of the system. To keep the model from acting blindly, it needs a clearly designed decision-making process and a fallback mechanism.
• Query Augmentation (the translator): turns the user's ambiguous natural language into precise, machine-executable intent. For example, "Find the document mentioned in last week's meeting" becomes a precise search instruction that specifies a time range, document type, and keywords.
• Retrieval (the library): connects to external knowledge bases. The core challenge is balancing recall and precision, avoiding both information overload and the omission of key information.
• Memory (the hippocampus): gives the system a sense of history and the ability to learn. Memory is not simply data storage; it must account for time decay, importance weighting, and privacy protection.
• Tools: let the AI interact with real-time data and APIs, so it can move beyond generating text and actually perform operations (such as checking inventory or sending emails).

Methodological paradigm shift:
• Past (prompt engineering): relied on the model's own intelligence, plus a lot of time spent tweaking the wording of the prompt.
• Now (context engineering): the focus is on system design. If the context engineering (data pipeline, retrieval quality, memory management) is done well, even a medium-sized model often outperforms a system that uses a very large model but lacks context support.
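To make the Query Augmentation example concrete, here is a minimal Python sketch that turns the sample request into a structured query with a time range, document type, and keywords. It is a rule-based stand-in, assuming a hypothetical `StructuredQuery` shape and `augment_query` helper; in a real system an LLM would typically do this rewriting, and this is not the method described in the Weaviate post.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class StructuredQuery:
    """Hypothetical machine-executable form of a user request."""
    keywords: list[str] = field(default_factory=list)
    doc_type: str | None = None
    start: date | None = None  # inclusive start of the time filter
    end: date | None = None    # inclusive end of the time filter


# Filler words dropped from the keyword list (illustrative, not exhaustive).
STOPWORDS = {"find", "the", "a", "an", "in", "of", "mentioned", "last", "week", "week's"}
DOC_TYPES = {"document", "slide", "spreadsheet"}


def augment_query(text: str, today: date) -> StructuredQuery:
    """Rule-based stand-in for an LLM query rewriter."""
    words = [w.strip(".,?!\"").lower() for w in text.split()]
    query = StructuredQuery()
    # "last week" -> the previous Monday-to-Sunday calendar week
    if "last" in words and ("week" in words or "week's" in words):
        this_monday = today - timedelta(days=today.weekday())
        query.start = this_monday - timedelta(days=7)
        query.end = this_monday - timedelta(days=1)
    for w in words:
        if w in DOC_TYPES:
            query.doc_type = w
        elif w not in STOPWORDS:
            query.keywords.append(w)
    return query


q = augment_query("Find the document mentioned in last week's meeting", date(2025, 6, 18))
print(q)  # keywords ['meeting'], doc_type 'document', 2025-06-09 to 2025-06-15
```

The structured form is what a search backend can execute directly: the date range becomes a filter, the document type narrows the collection, and only the remaining keywords drive the text or vector match.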
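The recall/precision balance in the Retrieval pillar is often tuned with two knobs: how many candidates to return (top-k) and a minimum similarity score. The sketch below is a hypothetical in-memory illustration using cosine similarity over toy vectors; `retrieve`, `k`, and `min_score` are illustrative names, not Weaviate's API.

```python
from __future__ import annotations

import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, corpus, k=3, min_score=0.5):
    """Return up to k (doc_id, score) pairs whose similarity is at least min_score.

    k caps how much text reaches the context window; min_score drops weak matches.
    """
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [(doc_id, s) for doc_id, s in scored[:k] if s >= min_score]


# Toy embeddings; a real system would get these from an embedding model.
corpus = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-faq": [0.7, 0.6, 0.1],
    "office-party": [0.0, 0.1, 0.95],
}
query = [1.0, 0.2, 0.0]
print(retrieve(query, corpus, k=3, min_score=0.5))
```

Raising `k` or lowering `min_score` tends to improve recall at the cost of more noise in the context; tightening either tends to improve precision but risks dropping a relevant document.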
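One way to read "time decay, importance weighting, and privacy protection" from the Memory pillar is as a ranking function over stored memories. The sketch below assumes a hypothetical scoring rule (importance multiplied by an exponential half-life decay) and a simple `private` flag; the post does not specify a formula, and `Memory`, `memory_score`, and `recall` are illustrative names.

```python
from __future__ import annotations

from dataclasses import dataclass

DAY = 86_400.0  # seconds


@dataclass
class Memory:
    text: str
    created_at: float        # Unix timestamp in seconds
    importance: float        # 0..1, assigned when the memory is written
    private: bool = False    # hypothetical flag for sensitive data


def memory_score(m: Memory, now: float, half_life: float = DAY) -> float:
    """Importance weighted by exponential time decay (halves every half_life seconds)."""
    age = max(0.0, now - m.created_at)
    return m.importance * 0.5 ** (age / half_life)


def recall(memories: list[Memory], now: float, top_n: int = 2,
           include_private: bool = False) -> list[Memory]:
    """Rank memories for the context window, excluding private ones by default."""
    candidates = [m for m in memories if include_private or not m.private]
    return sorted(candidates, key=lambda m: memory_score(m, now), reverse=True)[:top_n]


now = 10 * DAY
memories = [
    Memory("User prefers dark mode", created_at=now, importance=0.5),
    Memory("Project deadline is Friday", created_at=now - DAY, importance=0.9),
    Memory("Small talk about the weather", created_at=now - 5 * DAY, importance=0.3),
    Memory("User's home address", created_at=now, importance=1.0, private=True),
]
print([m.text for m in recall(memories, now)])
```

Here the day-old deadline (score 0.45) still outranks most older memories because of its high importance, while the five-day-old small talk decays to almost nothing and the private entry never reaches the model unless explicitly allowed.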
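The Agent and Tools pillars can be combined into a minimal decision loop with a fallback. In this hypothetical sketch, `decide` stands in for the LLM's choice of action, `check_inventory` fakes an inventory API, and every failure path (no action, unknown tool, tool error) returns an explicit fallback instead of letting the model act blindly.

```python
from __future__ import annotations

import re
from typing import Callable

# Hypothetical stand-in for a real inventory API.
_STOCK = {"A-100": 12, "B-200": 0}


def check_inventory(sku: str) -> str:
    if sku not in _STOCK:
        raise KeyError(sku)
    return f"{sku}: {_STOCK[sku]} in stock"


# Registry of callable tools, keyed by the name the agent refers to them by.
TOOLS: dict[str, Callable[[str], str]] = {"check_inventory": check_inventory}


def decide(user_msg: str) -> tuple[str, str] | None:
    """Placeholder for the LLM's decision: (tool_name, argument) or None."""
    sku = re.search(r"\b[A-Z]-\d{3}\b", user_msg)
    if "stock" in user_msg.lower() and sku:
        return "check_inventory", sku.group()
    return None


def run_agent(user_msg: str) -> str:
    action = decide(user_msg)
    if action is None:
        return "FALLBACK: no suitable tool; answer directly or ask the user to clarify"
    name, arg = action
    tool = TOOLS.get(name)
    if tool is None:
        return f"FALLBACK: unknown tool {name!r}"
    try:
        return tool(arg)
    except Exception as exc:  # any tool failure triggers the fallback path
        return f"FALLBACK: {name} failed ({exc!r})"


for msg in ["Is A-100 in stock?", "Is Z-999 in stock?", "Tell me a joke"]:
    print(run_agent(msg))
```

Keeping tools in an explicit registry means the agent can only call operations the system actually exposes, and routing every error to a labeled fallback makes failures visible instead of silently producing made-up answers.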