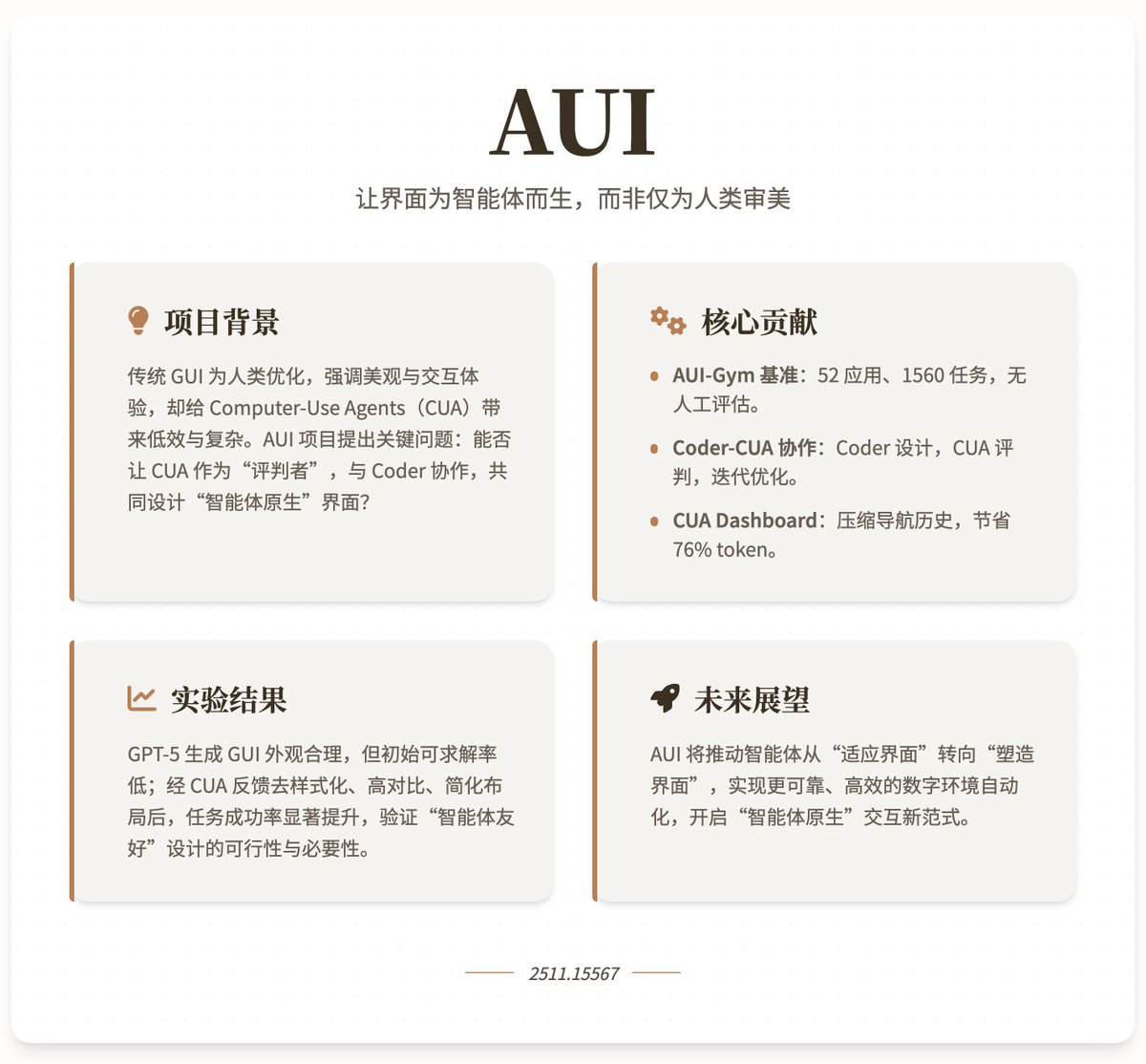

With Gemini 3.0 Pro and Claude Opus 4.5 continuing to upgrade their UI generation capabilities, is there any future left for front-end developers? 😂 Just kidding 😄 AI models are now so good at generating UIs that the results are very user-friendly for humans, but are those UIs user-friendly for AI agents?

The latest research, "AUI," from the University of Oxford, the National University of Singapore, and Microsoft, explores how to use Computer-Use Agents (CUAs) and coding language models to automatically generate and optimize GUIs, making interfaces better suited to agents rather than humans.

Project Background and Motivation: Traditional GUIs are optimized primarily for humans, emphasizing aesthetics, usability, and visual appeal (such as animations and colorful layouts). This forces CUAs to mimic human behavior when operating them, which adds complexity and reduces efficiency. With coding language models now able to generate functional websites automatically, the AUI project raises a key question: can CUAs act as "judges" that help coders design GUIs automatically? This collaboration aims to create "agent-native" interfaces that prioritize task efficiency over human aesthetics. Through agent feedback, the project hopes to make the automation of digital environments more reliable and efficient, shifting agents from passively adapting to their environments toward actively shaping them.

Core contributions:

1. AUI-Gym Benchmark Platform: A benchmark designed specifically for automated GUI development and testing, covering 52 applications across 6 domains (App, Landing, Game, Interactive, Tool, and Utility). The project uses GPT-5 to generate 1,560 tasks simulating real-world scenarios (30 per application), with quality ensured through human verification. These tasks emphasize functional completeness and interactivity, such as creating habits and viewing charts in the "Micro Habit Tracker" application.
Each task is equipped with a rule-based validator that checks, via JavaScript, whether the task is executable in a given interface, enabling reliable evaluation without human intervention. Benchmark metrics include:

• Function Completeness (FC): Assesses whether the interface supports the task (i.e., whether a function checker exists for it), as a basic usability measure.

• CUA Success Rate (SR): Measures the average rate at which the CUA completes navigation tasks, reflecting how effectively tasks can actually be executed.

2. Coder-CUA Collaboration Framework: The framework casts the Coder (a coding language model) as the "designer," responsible for creating the initial GUI and revising it iteratively; the CUA acts as the "judge," providing guidance through task solving and navigation feedback. Specific workflow:

• Initial generation: The Coder generates an initial HTML website from a user query (including name, goal, function, and theme).

• CUA testing: The CUA first verifies whether each task is solvable (infeasible tasks are collected as functional feedback), then navigates the website through atomic actions such as clicking and typing.

• Feedback loop: Unsolvable tasks are condensed into a natural-language summary that the Coder uses to improve functionality; navigation trajectories are compressed into visual feedback through the CUA Dashboard to help the Coder optimize the layout.

3. CUA Dashboard: Condenses the CUA's multi-step navigation history (including screenshots, actions, and results) into a single 1920×1080 image. By adaptively cropping key interaction areas, it reduces visual tokens by an average of 76.2% while retaining the necessary cues (such as task objectives, steps, and failure points). This makes feedback more interpretable, allowing the Coder to identify issues (such as low contrast or complex layouts) and make targeted revisions, such as removing styles, increasing contrast, or simplifying the structure.
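To make the FC and SR metrics described above concrete, here is a minimal Python sketch of how they could be computed from per-task checkers. It is an illustration under stated assumptions, not AUI-Gym's code: the `Task` type, the dict-based page state, and the example tasks are hypothetical; the real validators are JavaScript run against the live page; and averaging SR over all tasks (counting unsupported ones as failures) is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical stand-in for the page's final state after a CUA run. In AUI-Gym
# the checkers are JavaScript executed in the browser; plain dicts and Python
# callables are used here only to keep the sketch self-contained.
PageState = dict


@dataclass
class Task:
    description: str
    # None means no checker exists for this interface, i.e. the task is unsupported.
    checker: Optional[Callable[[PageState], bool]]


def function_completeness(tasks: list[Task]) -> float:
    """FC: fraction of tasks for which a checker exists."""
    if not tasks:
        return 0.0
    return sum(t.checker is not None for t in tasks) / len(tasks)


def success_rate(tasks: list[Task], final_states: list[PageState]) -> float:
    """SR: fraction of tasks whose checker passes on the CUA's final page state.

    Assumption: averaged over all tasks, with unsupported tasks counted as failures.
    """
    if len(tasks) != len(final_states):
        raise ValueError("need exactly one final state per task")
    if not tasks:
        return 0.0
    passed = sum(
        t.checker is not None and bool(t.checker(state))
        for t, state in zip(tasks, final_states)
    )
    return passed / len(tasks)


# Toy "Micro Habit Tracker" tasks with hypothetical page-state keys.
tasks = [
    Task("Create a habit named 'Read'", lambda s: "Read" in s.get("habits", [])),
    Task("View the weekly chart", lambda s: s.get("chart_visible", False)),
    Task("Export habits as CSV", None),  # no checker: unsupported by this interface
]
final_states = [{"habits": ["Read"]}, {"chart_visible": False}, {}]

print(round(function_completeness(tasks), 3))       # 0.667
print(round(success_rate(tasks, final_states), 3))  # 0.333
```

The example shows why the two metrics diverge: an interface can support a task (raising FC) while the agent still fails to complete it (leaving SR low).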
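The Coder-CUA workflow above is essentially a generate, evaluate, revise loop. The Python sketch below illustrates that control flow under heavy simplification and is not the project's implementation: the Coder and CUA are replaced by hypothetical rule-based stubs (`toy_generate`, `toy_revise`, `toy_is_solvable`, `toy_navigate`), HTML is treated as a plain string, the query values are made up, and a list of failed task names stands in for the CUA Dashboard image.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Feedback:
    # Tasks the CUA judged infeasible: sent to the Coder as a text summary.
    unsolvable: list[str] = field(default_factory=list)
    # Feasible tasks the CUA failed to navigate. In AUI this feedback is a
    # CUA Dashboard image; a list of task names stands in for it here.
    failed_navigation: list[str] = field(default_factory=list)


def evaluate(html: str, tasks: list[str],
             is_solvable: Callable[[str, str], bool],
             navigate: Callable[[str, str], bool]) -> Feedback:
    """CUA as judge: check solvability first, then attempt navigation."""
    fb = Feedback()
    for task in tasks:
        if not is_solvable(html, task):
            fb.unsolvable.append(task)
        elif not navigate(html, task):
            fb.failed_navigation.append(task)
    return fb


def completion_rate(fb: Feedback, tasks: list[str]) -> float:
    """Toy SR-style score: share of tasks that were solvable and navigated."""
    failed = len(fb.unsolvable) + len(fb.failed_navigation)
    return (len(tasks) - failed) / len(tasks) if tasks else 0.0


def refine_gui(query: dict, tasks: list[str],
               generate: Callable[[dict], str],
               revise: Callable[[str, Feedback], str],
               is_solvable: Callable[[str, str], bool],
               navigate: Callable[[str, str], bool],
               max_rounds: int = 3) -> tuple[str, list[float]]:
    """Coder as designer: generate once, then revise until the CUA succeeds."""
    html = generate(query)
    fb = evaluate(html, tasks, is_solvable, navigate)
    history = [completion_rate(fb, tasks)]
    for _ in range(max_rounds):
        if not fb.unsolvable and not fb.failed_navigation:
            break
        html = revise(html, fb)
        fb = evaluate(html, tasks, is_solvable, navigate)
        history.append(completion_rate(fb, tasks))
    return html, history


# Toy stand-ins for the Coder and CUA (hypothetical, rule-based).
FEATURES = {"create habit": "<form id='new-habit'>", "view chart": "<canvas id='chart'>"}


def toy_generate(query: dict) -> str:
    # Stylish first draft that is missing the chart feature.
    return "<body class='animated'>" + FEATURES["create habit"]


def toy_revise(html: str, fb: Feedback) -> str:
    for task in fb.unsolvable:
        html += FEATURES[task]  # functional fix: add the missing feature
    if fb.failed_navigation:
        html = html.replace("animated", "high-contrast")  # layout fix: de-stylize
    return html


def toy_is_solvable(html: str, task: str) -> bool:
    return FEATURES[task] in html


def toy_navigate(html: str, task: str) -> bool:
    return "high-contrast" in html  # the toy CUA struggles with animated styling


query = {"name": "Micro Habit Tracker", "goal": "build daily habits",
         "function": "create habits, view charts", "theme": "playful"}
html, history = refine_gui(query, list(FEATURES), toy_generate, toy_revise,
                           toy_is_solvable, toy_navigate)
print(history)  # [0.0, 1.0]
```

Checking solvability before navigation mirrors the split described above: functional gaps are reported back to the Coder as unsolvable tasks, while navigation failures on otherwise feasible tasks drive layout changes such as de-stylization.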
Experimental results show that advanced coders (such as GPT-5) can generate visually appealing GUIs, but their initial functional completeness is low (many tasks are unsolvable); failure feedback improves it rapidly. CUA navigation is the main bottleneck: even when functionality is complete, the initial success rate is low. Through collaborative iteration (such as de-stylization, higher contrast, and simplified layouts), however, the success rate improves significantly, demonstrating that agent feedback makes GUIs more robust and efficient to operate. The project emphasizes that agents prefer simple, function-oriented interfaces over human-oriented visual complexity.

Research project address
