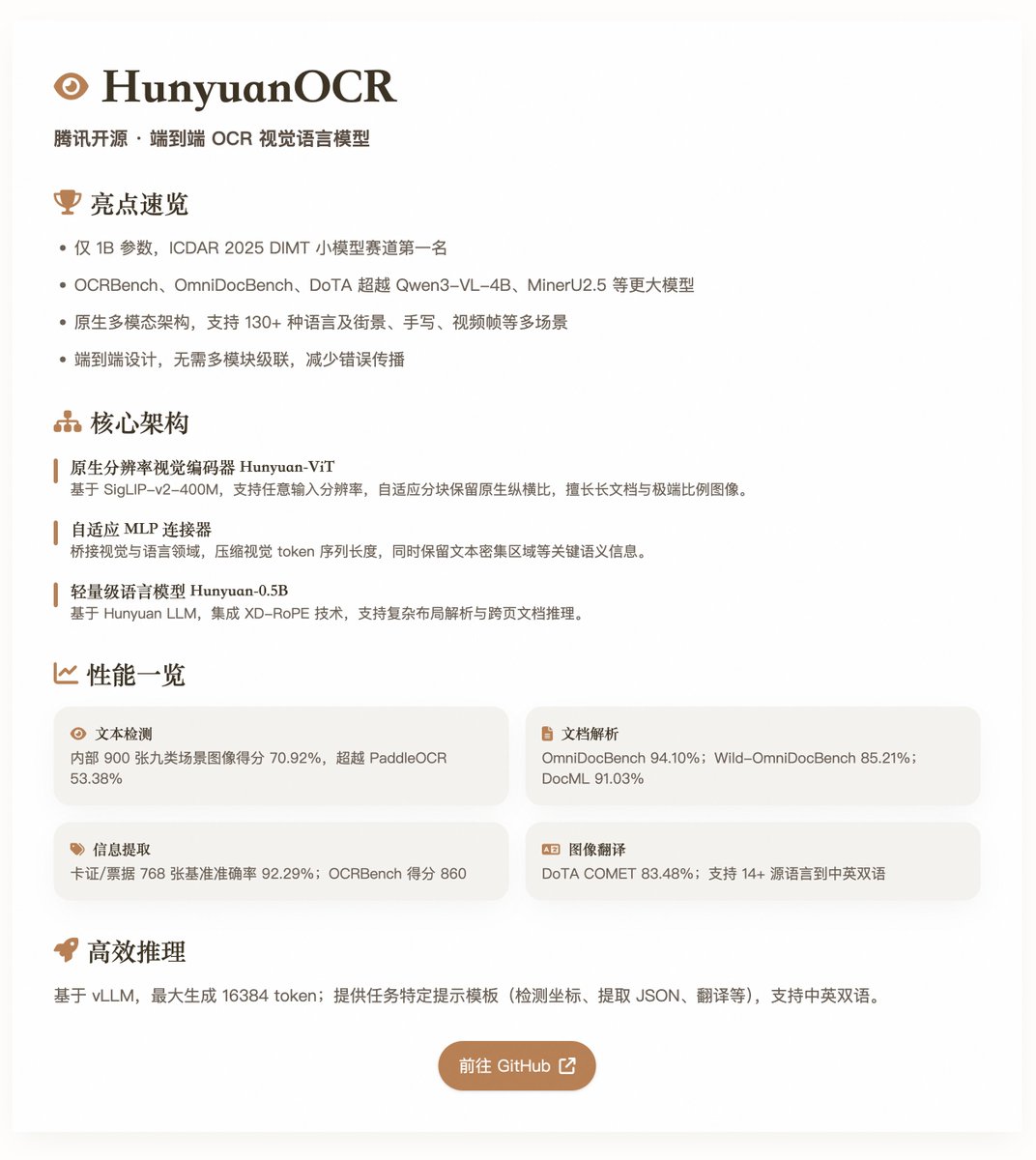

HunyuanOCR is Tencent's open-source, end-to-end OCR vision-language model. With only 1B parameters, it achieves leading results on multiple OCR benchmarks. Built on a native multimodal architecture and optimized for OCR, it suits text detection, document parsing, information extraction, visual question answering, and text-image translation. The model took first place in the ICDAR 2025 DIMT Challenge (small model track) and outperforms many larger models, such as Qwen3-VL-4B and MinerU2.5, on benchmarks such as OCRBench, OmniDocBench, and DoTA.

Core features and architecture

HunyuanOCR uses a pure end-to-end vision-language model design, avoiding the multi-module cascade of traditional OCR systems and thereby reducing error propagation and maintenance costs. Its architecture comprises three main components:

• Native Resolution Visual Encoder (Hunyuan-ViT): Based on the SigLIP-v2-400M pre-trained model, with approximately 0.4B parameters, it supports arbitrary input resolutions. An adaptive patching mechanism preserves the original aspect ratio of each image, so the encoder handles long documents, images with extreme aspect ratios, and low-quality scans well.
• Adaptive MLP Connector: Serving as the bridge between the vision and language domains, it compresses spatial content, shortening the visual token sequence while preserving key semantic information such as dense text regions.
• Lightweight Language Model (Hunyuan-0.5B): Based on Hunyuan LLM, with approximately 0.5B parameters, it integrates XD-RoPE, which decomposes RoPE into four subspaces (text, height, width, and time) to support complex layout parsing and cross-page document reasoning.
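To make the XD-RoPE idea concrete, here is a minimal sketch of a rotary embedding split into four subspaces, one per axis. It is not the HunyuanOCR implementation: the equal-sized split, the function name `xd_rope_rotate`, the `base` value, and the way positions are passed in are all assumptions chosen for illustration.

```python
import math

# Axis names follow the thread; the equal four-way split is an assumption.
AXES = ("text", "height", "width", "time")


def xd_rope_rotate(vec, positions, base=10000.0):
    """Rotate `vec` with a rotary embedding split into four subspaces.

    vec: list of floats whose length is divisible by 8
         (four axes, each made of 2-D rotation pairs).
    positions: dict mapping each axis name to its position index.
    """
    if len(vec) % (2 * len(AXES)) != 0:
        raise ValueError("vector length must be divisible by 8")
    sub = len(vec) // len(AXES)  # dimensions owned by each axis
    out = []
    for a, axis in enumerate(AXES):
        chunk = vec[a * sub:(a + 1) * sub]
        pos = positions[axis]
        for i in range(0, sub, 2):
            # Standard RoPE frequency schedule, applied within the subspace.
            theta = pos * base ** (-i / sub)
            c, s = math.cos(theta), math.sin(theta)
            x, y = chunk[i], chunk[i + 1]
            out.extend([x * c - y * s, x * s + y * c])
    return out
```

Because each pair is only rotated, the vector norm is unchanged, and the dot product between a rotated query and key depends only on the per-axis position differences. That relative-position property is what lets a single attention layer reason about reading order, row, column, and page or frame at once.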
The model handles multiple tasks in a unified way: natural language instructions (such as "detect and recognize text in an image") drive everything from perception to semantic tasks, with no additional preprocessing modules. It covers 130+ languages, including low-resource ones, and many scenarios (such as street view, handwriting, and video frames). Training emphasizes high-quality, application-aligned data and reinforcement learning (RL) to improve robustness in complex scenarios.

Training and Data Construction

• Pre-training phase: This phase consists of four stages totaling approximately 454B tokens: vision-language alignment, multimodal pre-training, long-context extension (up to 32K tokens), and application-oriented supervised fine-tuning. The data mixes open-source datasets, synthetic element-level data, and end-to-end application data, totaling approximately 200 million high-quality samples across nine scenarios, including street view, documents, and handwriting.
• Post-training phase: The online reinforcement learning algorithm GRPO is used, combined with task-specific reward mechanisms (such as rule-based rewards and LLM-as-a-judge). This significantly improves the model's accuracy and stability on challenging tasks such as document parsing and translation.

The data pipeline emphasizes synthesis and augmentation: it extends the SynthDog framework to generate long multilingual documents, with support for RTL layouts and complex fonts; introduces a distortion synthesis pipeline to simulate real-world defects (such as blurring, distortion, and lighting changes); and adds an automated QA generation pipeline that reuses cross-task samples to ensure diversity and quality.

Performance Evaluation

• Text Detection (Spotting): Score of 70.92% on an internal benchmark of 900 images (nine scene categories), outperforming PaddleOCR (53.38%) and Qwen3-VL-235B (53.62%).
• Document parsing: Overall score of 94.10% on OmniDocBench, with a text edit distance of 0.042; 85.21% on Wild-OmniDocBench (real-world captures); 91.03% on DocML (multilingual).
• Information extraction and VQA: 92.29% accuracy on a benchmark of 768 cards/tickets; 92.87% on video caption extraction; OCRBench score of 860.
• Text-image translation: Supports 14+ source languages into Chinese/English, with a COMET score of 83.48% on DoTA and, on DocML, 73.38% (other languages to English) and 73.62% (other languages to Chinese).

These results highlight the model's efficiency at a lightweight scale, especially in real-world scenarios, where it outperforms modular VLMs and traditional pipelines. Efficient inference is supported through vLLM (@vllm_project), generating up to 16384 tokens. The report provides task-specific prompt templates, such as coordinate detection, JSON extraction, or translation, in both Chinese and English.

Open source model:
