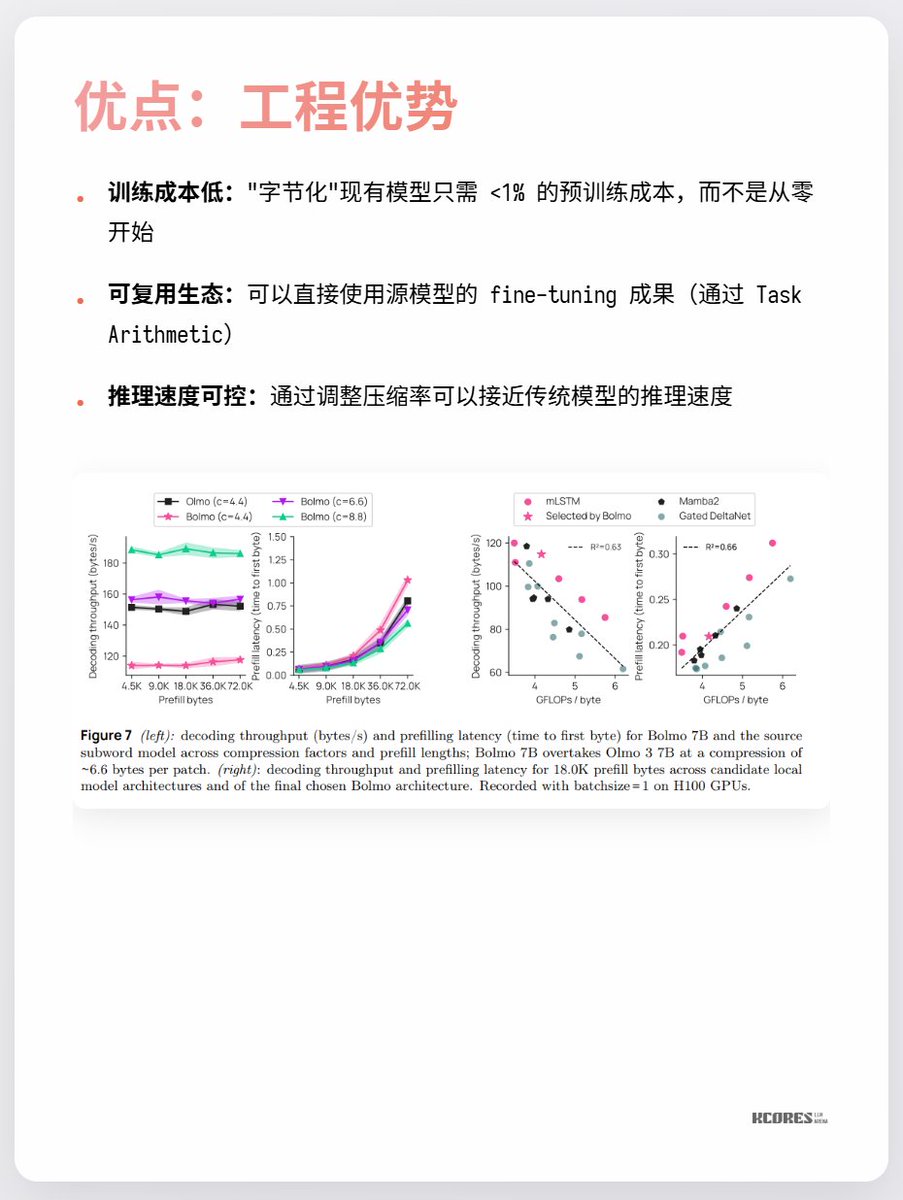

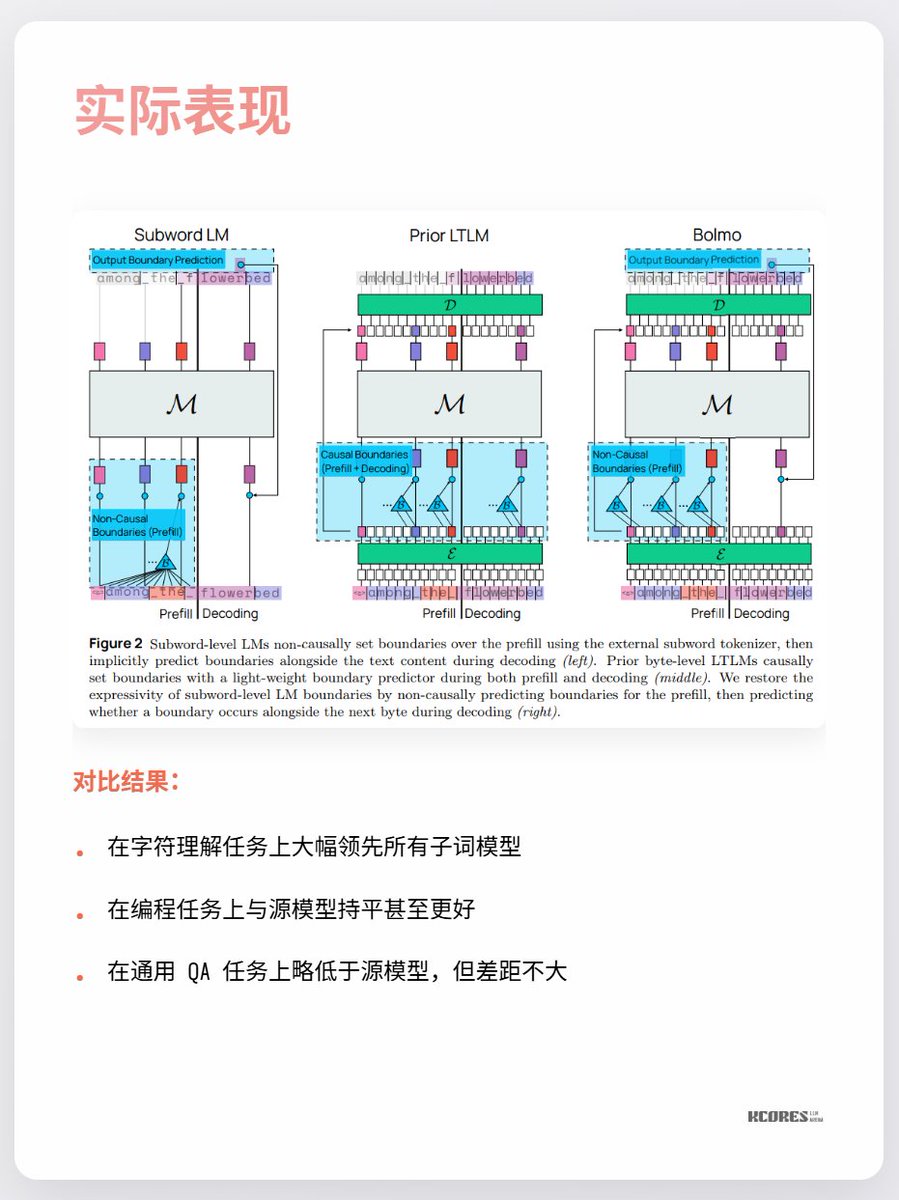

Bolmo's model takes a clever approach: instead of training from scratch, it "byte-encodes" the existing model. It has a built-in Local Encoder/Decoder that compresses byte sequences into "potential tokens" before feeding them into a traditional Transformer for processing. This allows for conversion with minimal overhead.

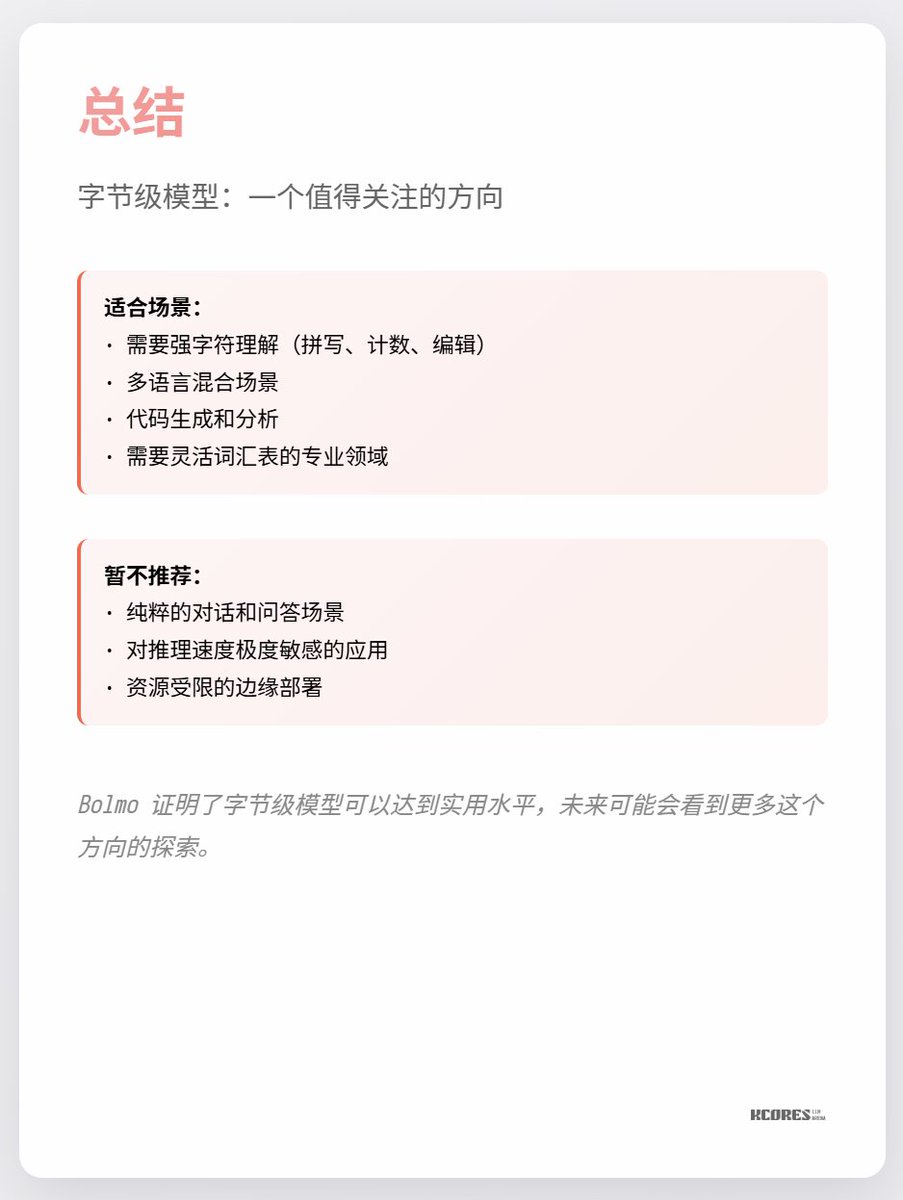

The biggest points of contention right now are that there isn't much benefit to be seen, and that longer sequences mean more key-value cache, putting more pressure on GPU memory. Also, the significant lead is only seen in the single task of character understanding, with little notable improvement in other tasks. In short, it's worth keeping an eye on. The spiral exploration during periods of technological breakthroughs is always very interesting. For example, I personally liked mercury rectifiers (last picture), but they have now been replaced by IGBTs.