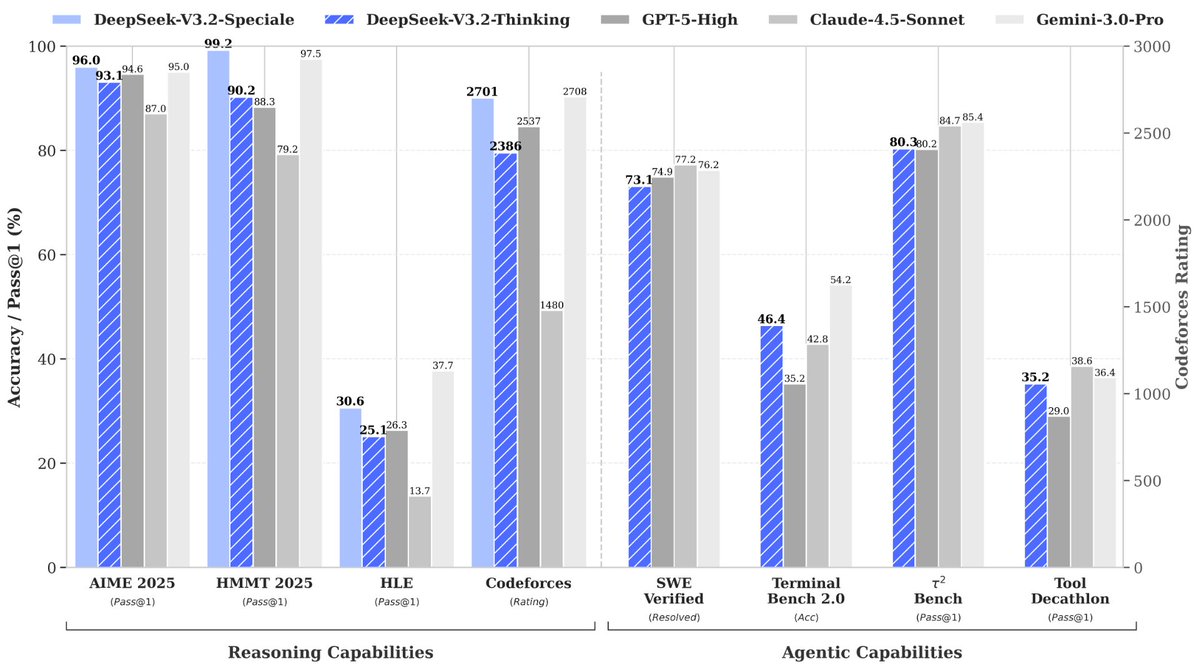

DeepSeek has just released its latest model, V3.2, which focuses on efficient inference and strong agent capabilities. It introduces the DeepSeek Sparse Attention (DSA) mechanism, which significantly reduces computational complexity while maintaining model performance and is optimized specifically for long-context scenarios. A breakthrough in large-scale agent task synthesis integrates reasoning into tool-calling scenarios, significantly improving the model's performance in complex interactive environments.

Model address: https://t.co/VwGFgEylOz

An enhanced version, DeepSeek-V3.2-Speciale, was released at the same time and performs even better. Backed by a reinforcement learning framework, its reasoning ability is said to surpass GPT-5 and be comparable to Gemini-3.0-pro, and it has won gold medals in international competitions such as IMO 2025 and IOI.

Model address: https://t.co/yWt5pX9UH2

In addition, the chat template has been updated to support the "thinking with tools" capability, and a new developer role has been added specifically for search agents.

Overall, this is a substantial upgrade for DeepSeek and worth paying attention to.
