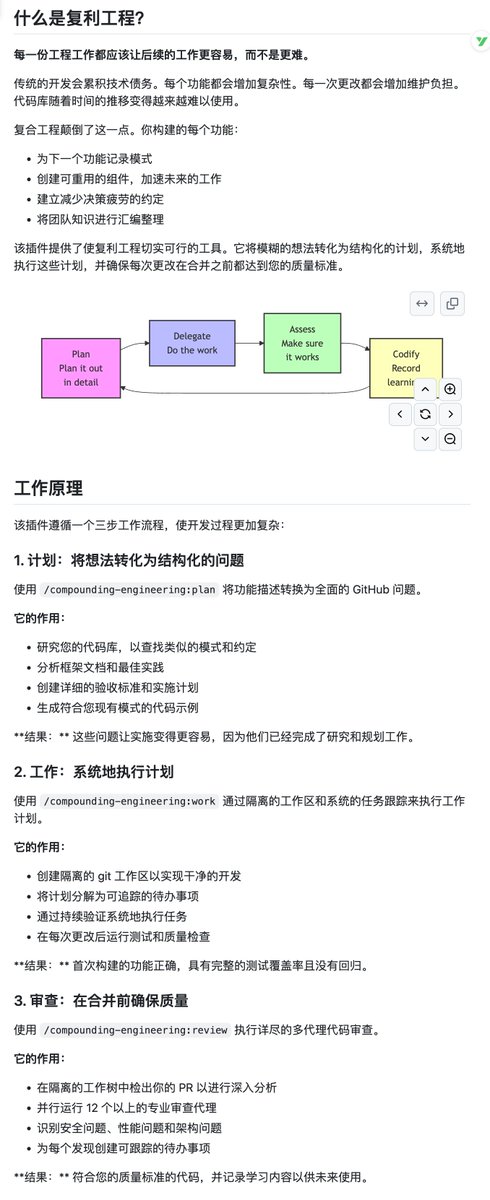

When programming with AI assistance, the hardest part is often not writing the code. It is making sure the AI strictly follows the team's existing engineering standards instead of generating code "at will" every time. I recently came across the open-source Compounding Engineering Plugin, which proposes a rigorous Plan-Work-Review engineering workflow. Its goal is for every AI-driven development cycle to accumulate quality rather than technical debt.

It is not just a "memory store." In the Plan phase, it analyzes patterns in the codebase in depth and generates detailed issues. It then carries out the development in an isolated environment (a Git worktree). Finally, more than 10 dedicated agents review the result in parallel.

GitHub: https://t.co/9LLs5a3SpL

The most interesting part is the review mechanism. It ships with specialized agents such as `security-sentinel` and `performance-oracle`, which give the code a thorough check-up, much as a senior human engineer would.

If you want Claude Code to be more than a "code generator," a disciplined "virtual colleague" that can both write code and review its own work, this plugin is well worth exploring.