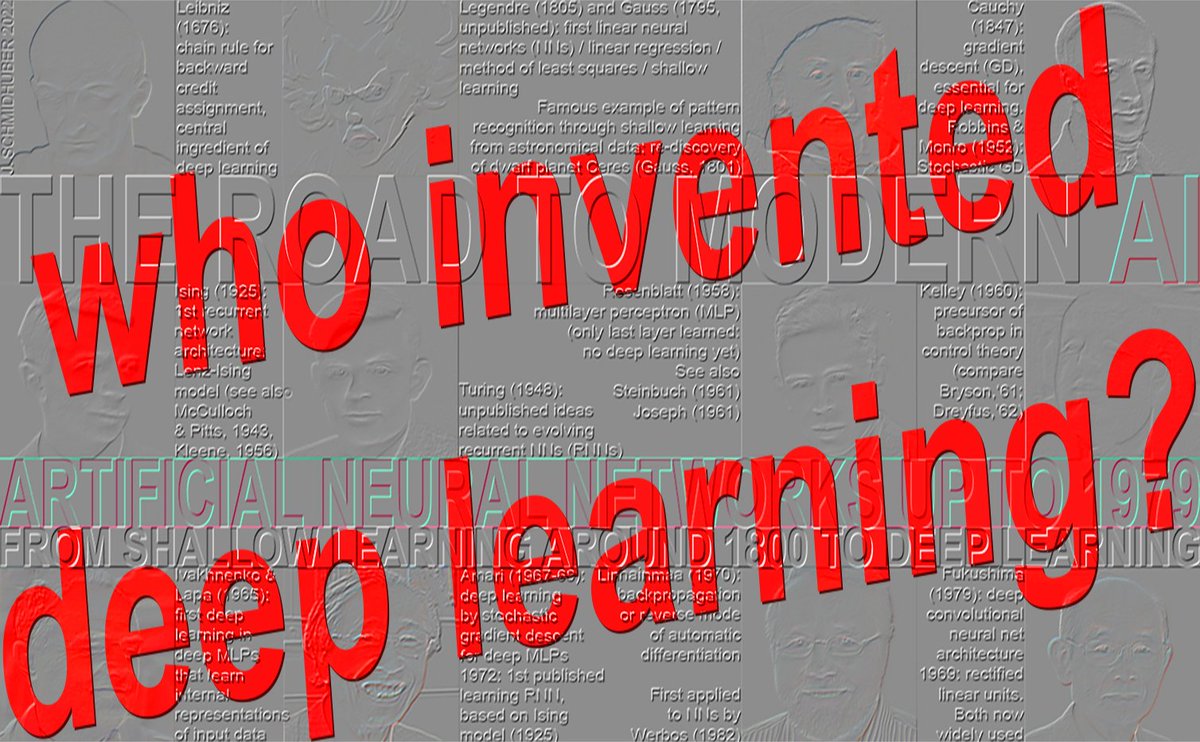

Who invented deep learning? people.idsia.ch/~juergen/who-i…

Timeline:

- 1965: first deep learning (Ivakhnenko & Lapa, 8 layers by 1971)
- 1967-68: end-to-end deep learning by stochastic gradient descent (Amari, 5 layers)
- 1970: backpropagation (Linnainmaa, 1970), applied to NNs by Werbos (1982); rarely more than 5 layers in the 1980s
- 1991-93: unsupervised pre-training for deep NNs (Schmidhuber, others, 100+ layers)
- 1991-2015: deep residual learning (Hochreiter, others, 100+ layers)
- 1996-: deep learning without gradients (100+ layers)

★ 1965: first deep learning (Ivakhnenko & Lapa, 8 layers by 1971)

Successful learning in deep feedforward network architectures started in 1965 in Ukraine (then part of the USSR), when Alexey Ivakhnenko & Valentin Lapa introduced the first general, working learning algorithms for deep multilayer perceptrons (MLPs), or feedforward NNs (FNNs), with many hidden layers (already containing the now popular multiplicative gates) [DEEP1-2][DL1-2][DLH][WHO5]. A 1971 paper [DEEP2] described a deep learning net with 8 layers, trained by their highly cited method, which was still popular in the new millennium [DL2]. Given a training set of input vectors with corresponding target output vectors, layers are incrementally grown and trained by regression analysis. In a fine-tuning phase, superfluous hidden units are pruned through regularization with the help of a separate validation set [DEEP2][DLH]. This simplifies the net and improves its generalization on unseen test data. The number of layers and the number of units per layer are learned in a problem-dependent fashion. This is a powerful generalization of the original 2-layer Gauss-Legendre NN (1795-1805) [DLH]. That is, Ivakhnenko and colleagues had connectionism with adaptive hidden layers two decades before the name "connectionism" became popular in the 1980s. Like later deep NNs, his nets learned to create hierarchical, distributed, internal representations of incoming data.
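The layer-wise procedure described above can be sketched in plain Python. It grows a layer of regression-fitted units, keeps only the units that score best on a separate validation set, and stops when validation error no longer improves. This is a minimal illustration in the spirit of Ivakhnenko's Group Method of Data Handling, not a reconstruction of his 1971 algorithm. It assumes that each unit is a quadratic polynomial of two inputs fitted by least squares. The function names (`gmdh`, `gmdh_predict`, `fit_unit`) and the parameters `keep`, `max_layers` and `ridge` are hypothetical choices made for this example. The tiny ridge term only stabilizes the normal equations; the pruning itself happens through validation-based selection.

```python
from itertools import combinations

# Hypothetical GMDH-style sketch: the unit form, `keep`, `max_layers` and `ridge`
# are illustrative assumptions, not Ivakhnenko's published settings.


def unit_features(a, b):
    # Quadratic two-input unit: w0 + w1*a + w2*b + w3*a^2 + w4*b^2 + w5*a*b
    return [1.0, a, b, a * a, b * b, a * b]


def solve(A, v):
    # Gauss-Jordan elimination with partial pivoting for the 6x6 system A w = v
    n = len(v)
    M = [row[:] + [v[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(n):
            if r != c:
                f = M[r][c] / M[c][c]
                for k in range(c, n + 1):
                    M[r][k] -= f * M[c][k]
    return [M[i][n] / M[i][i] for i in range(n)]


def fit_unit(xa, xb, y, ridge=1e-6):
    # Least-squares regression via the normal equations; the small ridge term
    # only keeps the system well-conditioned
    rows = [unit_features(a, b) for a, b in zip(xa, xb)]
    A = [[sum(r[i] * r[j] for r in rows) + (ridge if i == j else 0.0)
          for j in range(6)] for i in range(6)]
    v = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(6)]
    return solve(A, v)


def unit_out(w, a, b):
    return sum(wi * fi for wi, fi in zip(w, unit_features(a, b)))


def mse(pred, y):
    return sum((p - t) ** 2 for p, t in zip(pred, y)) / len(y)


def gmdh(X_tr, y_tr, X_va, y_va, keep=4, max_layers=5):
    # Current layer inputs stored column-wise: tr[i] is input i over all samples
    tr = [list(col) for col in zip(*X_tr)]
    va = [list(col) for col in zip(*X_va)]
    layers, best_err = [], float("inf")
    for _ in range(max_layers):
        if len(tr) < 2:
            break  # a two-input unit needs at least two candidate inputs
        units = []
        for i, j in combinations(range(len(tr)), 2):
            w = fit_unit(tr[i], tr[j], y_tr)  # trained on the training set only
            err = mse([unit_out(w, a, b) for a, b in zip(va[i], va[j])], y_va)
            units.append((err, i, j, w))      # scored on the validation set
        units.sort(key=lambda u: u[0])
        units = units[:keep]                  # prune all but the best units
        if units[0][0] >= best_err:
            break                             # stop growing: no validation gain
        best_err = units[0][0]
        layers.append(units)
        # Outputs of the surviving units become the next layer's inputs
        tr = [[unit_out(w, a, b) for a, b in zip(tr[i], tr[j])] for _, i, j, w in units]
        va = [[unit_out(w, a, b) for a, b in zip(va[i], va[j])] for _, i, j, w in units]
    return layers, best_err


def gmdh_predict(layers, x):
    cols = list(x)
    for units in layers:
        cols = [unit_out(w, cols[i], cols[j]) for _, i, j, w in units]
    return cols[0]  # the best unit of the last layer is the network output
```

In this sketch, every unit sees only two inputs and is fitted by ordinary regression on the training set. Each layer is therefore trained with locally available information and no backward pass. The depth of the network is set by how many layers keep improving the validation score.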
Ivakhnenko did not call his nets deep learning NNs, but that's what they were. His pioneering work was repeatedly plagiarized by researchers who went on to share a Turing award [DLP][NOB]. For example, the depth of Ivakhnenko's 1971 layer-wise training [DEEP2] was comparable to the depth of Hinton's and Bengio's 2006 layer-wise training [UN4][UN5]. Ivakhnenko achieved this when compute was millions of times more expensive, and the 2006 work was published 35 years later without comparison to the original [NOB]. Similarly, LeCun et al. [LEC89] published NN pruning techniques without referring to Ivakhnenko's original work on pruning deep NNs. Even in their much later "surveys" of deep learning [DL3][DL3a], the awardees failed to mention the very origins of deep learning [DLP][NOB]. Ivakhnenko & Lapa also demonstrated that it is possible to learn appropriate weights for hidden units using only locally available information, without requiring a biologically implausible backward pass [BP4]. Six decades later, Hinton attributed this achievement to himself [NOB25a].

How do Ivakhnenko's nets compare to even earlier multilayer feedforward nets without deep learning? In 1958, Frank Rosenblatt studied multilayer perceptrons (MLPs) [R58]. His MLPs had a non-learning first layer with randomized weights and an adaptive output layer. This was not yet deep learning, because only the last layer learned [DL1]. MLPs were also discussed in 1961 by Karl Steinbuch [ST61-95] and Roger David Joseph [R61]. See also Oliver Selfridge's multilayer Pandemonium (1959) [SE59]. In 1962, Rosenblatt et al. even wrote about "back-propagating errors" in an MLP with a hidden layer [R62], following Joseph's preliminary 1961 ideas about training hidden units [R61]. However, Joseph & Rosenblatt had no working deep learning algorithm for deep MLPs. What's now called backpropagation is quite different and was first published in 1970 by Seppo Linnainmaa [BP1-4].

Why did deep learning emerge in the USSR in the mid-1960s?
Back then, the country was leading many important fields of science and technology, most notably space: first satellite (1957), first man-made object on a heavenly body (1959), first man in space (1961), first woman in space (1963), first robot landing on a heavenly body (1966), first robot on another planet (1970). The USSR also detonated the world's biggest bomb ever (1961). It was home to many leading mathematicians and provided sufficient funding for blue-sky math research whose enormous significance would emerge only several decades later.

The other sections mentioned above (1967, 1970, 1991-93, 1991-2015, 1996) are covered by [WHO5]: Who invented deep learning? Technical Note IDSIA-16-25, IDSIA, Nov 2025.

SELECTED REFERENCES (many additional references in [WHO5]; see link above):

- [BP1] S. Linnainmaa. The representation of the cumulative rounding error of an algorithm as a Taylor expansion of the local rounding errors. Master's Thesis (in Finnish), Univ. Helsinki, 1970. See chapters 6-7 and FORTRAN code on pages 58-60. See also BIT 16, 146-160, 1976. The first publication on "modern" backpropagation, also known as the reverse mode of automatic differentiation.
- [BP4] J. Schmidhuber (2014). Who invented backpropagation?
- [BPA] H. J. Kelley. Gradient Theory of Optimal Flight Paths. ARS Journal, Vol. 30, No. 10, pp. 947-954, 1960. Precursor of modern backpropagation [BP1-4].
- [DEEP1] Ivakhnenko, A. G. and Lapa, V. G. (1965). Cybernetic Predicting Devices. CCM Information Corporation. First working Deep Learners with many layers, learning internal representations.
- [DEEP1a] Ivakhnenko, Alexey Grigorevich. The group method of data handling; a rival of the method of stochastic approximation. Soviet Automatic Control 13 (1968): 43-55.
- [DEEP2] Ivakhnenko, A. G. (1971). Polynomial theory of complex systems. IEEE Transactions on Systems, Man and Cybernetics, (4):364-378.
- [DL1] J. Schmidhuber, 2015. Deep learning in neural networks: An overview. Neural Networks, 61, 85-117. Got the first Best Paper Award ever issued by the journal Neural Networks, founded in 1988.
- [DL2] J. Schmidhuber, 2015. Deep Learning. Scholarpedia, 10(11):32832.
- [DL3] Y. LeCun, Y. Bengio, G. Hinton (2015). Deep Learning. Nature 521, 436-444. A "survey" of deep learning that does not mention the pioneering works of deep learning [DLP][NOB].
- [DL3a] Y. Bengio, Y. LeCun, G. Hinton (2021). Turing Lecture: Deep Learning for AI. Communications of the ACM, July 2021. Another "survey" of deep learning that does not mention the pioneering works of deep learning [DLP][NOB].
- [DLH] J. Schmidhuber. Annotated History of Modern AI and Deep Learning. Technical Report IDSIA-22-22, IDSIA, Lugano, Switzerland, 2022. Preprint arXiv:2212.11279.
- [DLP] J. Schmidhuber. How 3 Turing awardees republished key methods and ideas whose creators they failed to credit. Technical Report IDSIA-23-23, Swiss AI Lab IDSIA, 2023.
- [GD'] C. Lemaréchal. Cauchy and the Gradient Method. Doc Math Extra, pp. 251-254, 2012.
- [GD''] J. Hadamard. Mémoire sur le problème d'analyse relatif à l'équilibre des plaques élastiques encastrées. Mémoires présentés par divers savants étrangers à l'Académie des Sciences de l'Institut de France, 33, 1908.
- [GDa] Y. Z. Tsypkin (1966). Adaptation, training and self-organization automatic control systems, Avtomatika I Telemekhanika, 27, 23-61. On gradient descent-based on-line learning for non-linear systems.
- [GDb] Y. Z. Tsypkin (1971). Adaptation and Learning in Automatic Systems, Academic Press, 1971. On gradient descent-based on-line learning for non-linear systems.
- [GD1] S. I. Amari (1967). A theory of adaptive pattern classifier, IEEE Trans. EC-16, 279-307 (Japanese version published in 1965). Probably the first paper on using stochastic gradient descent [STO51-52] for learning in multilayer neural networks. It does not specify the particular method for computing the gradients that is now known as the reverse mode of automatic differentiation or backpropagation [BP1].
- [GD2] S. I. Amari (1968). Information Theory—Geometric Theory of Information, Kyoritsu Publ., 1968 (in Japanese, see pages 119-120). Contains computer simulation results for a five-layer network (with 2 modifiable layers) that learns internal representations to classify non-linearly separable pattern classes.
- [GD2a] S. Saito (1967). Master's thesis, Graduate School of Engineering, Kyushu University, Japan. Implementation of Amari's 1967 stochastic gradient descent method for multilayer perceptrons [GD1]. (S. Amari, personal communication, 2021.)
- [NOB] J. Schmidhuber. A Nobel Prize for Plagiarism. Technical Report IDSIA-24-24 (7 Dec 2024, updated Oct 2025).
- [NOB25a] G. Hinton. Nobel Lecture: Boltzmann machines. Rev. Mod. Phys. 97, 030502, 25 August 2025. One of the many problematic statements in this lecture is this: "Boltzmann machines are no longer used, but they were historically important [...] In the 1980s, they demonstrated that it was possible to learn appropriate weights for hidden neurons using only locally available information without requiring a biologically implausible backward pass." Again, Hinton fails to mention Ivakhnenko, who had shown this two decades earlier, in the 1960s [DEEP1-2].
- [WHO5] J. Schmidhuber (AI Blog, 2025). Who invented deep learning? Technical Note IDSIA-16-25, IDSIA, Nov 2025. See link above.
