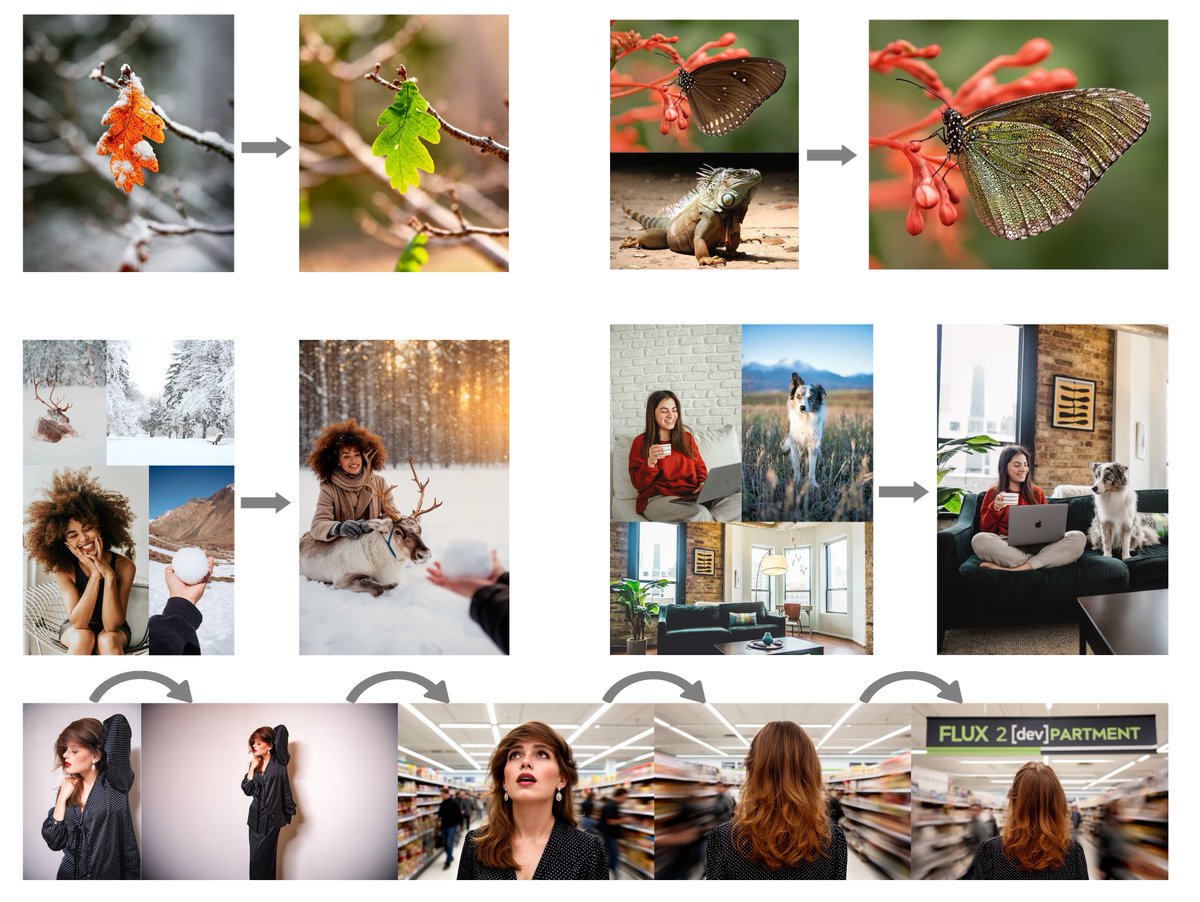

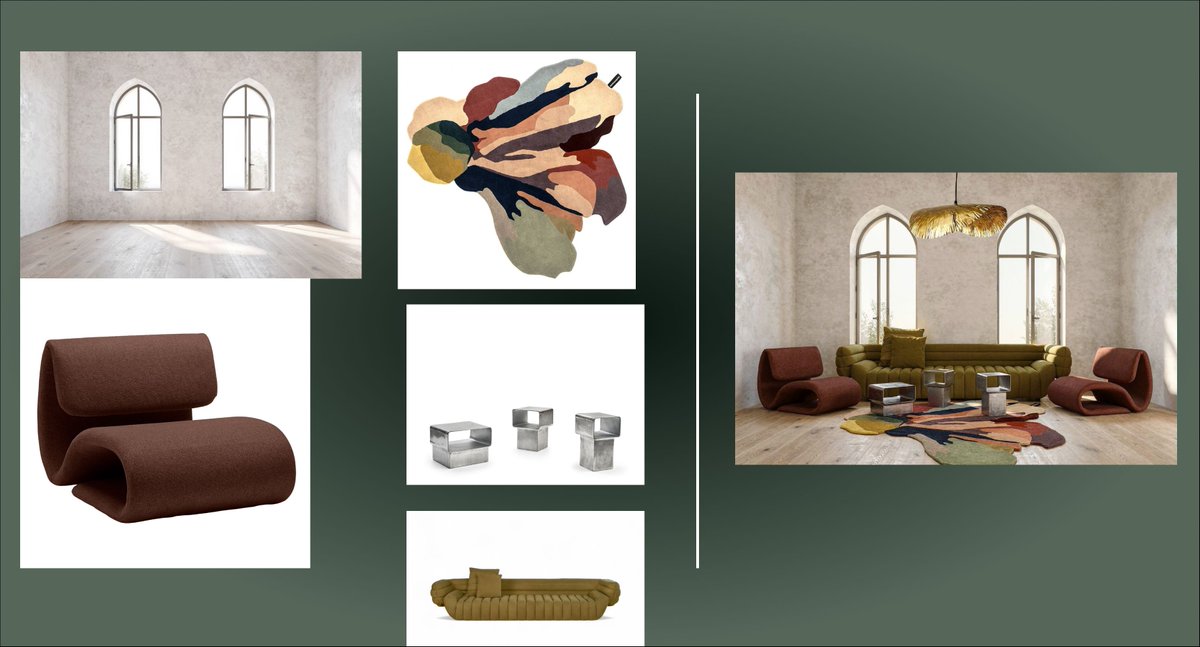

Black Forest Labs releases FLUX.2, still open source! It supports text-to-image generation, multi-image reference, and image editing, and significantly improves text generation and prompt word adherence capabilities. The specific model capabilities include: - Refer to up to 10 images at a time for optimal consistency. - Richer details, clearer textures, and more stable lighting. - Text rendering in complex typography, infographics, emojis, and user interfaces - Improved performance in following complex, structured instructions - Significantly more grounded in real-world knowledge, lighting, and spatial logic. - Supports image editing up to 4MP resolution Four model versions were released this time: FLUX.2 [pro]: State-of-the-art image quality comparable to the best closed models, offering similar cue compliance and visual realism to other models, while generating images faster and at a lower cost. Achieve both speed and quality. FLUX.2 [flex]: Allows developers to control model parameters such as step count and guidance strength, giving them complete control over quality, cue compliance, and speed. This model excels in rendering text and detail. FLUX.2 [dev]: A 32B open-weight model derived from the FLUX.2 base model. Currently the most powerful open-source image generation and editing model, combining text-to-image synthesis and multi-input image editing into a single model. FLUX.2 [klein] (coming soon): An open-source, Apache 2.0 licensed model, a distilled version of the FLUX.2 base model. More powerful and easier for developers to use than comparable models of the same size trained from scratch. FLUX.2 - VAE: A novel variational autoencoder for latent representations that provides an optimized trade-off between learnability, quality, and compression rate.

Multi-image reference and image editing