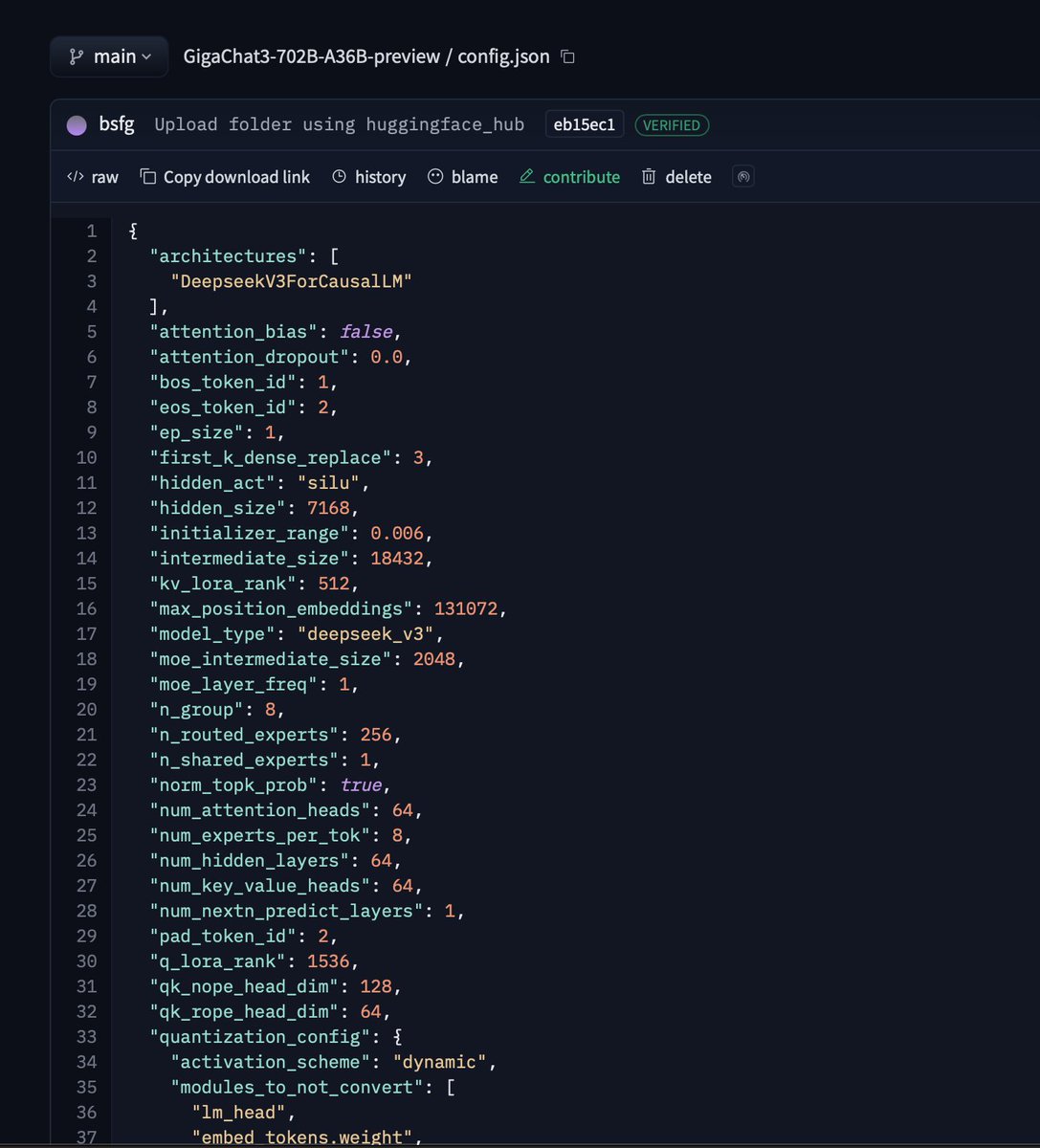

Russian Sberbank has scaled its "bruh just copy DeepSeek" paradigm to GigaChat 3 Ultra 702B-A36B. Very little info beyond the 5.5T synthetic tokens. > "More details in the Habr article (to do)." lmao. Well, that's something. Not many Western labs have shared even this much.

There's also a cute, dumb 10B-A1.8B DeepSeek-style model: huggingface.co/ai-sage/GigaCh… I think they can improve from there once they smuggle or rent more compute and polish their post-training. Right now, just finetuning DeepSeek would have been better, and they've even invested in interpretability for DeepSeek-style MoEs. It's a matter of will.